Netsparker Web Application Security Scanner version 4 is here. The main highlight of this version is the new fully automated Form Authentication mechanism: it does not require you to record anything, and it supports two-factor authentication and other authentication mechanisms that require a one-time code out of the box.

Below is a list of the feature highlights of the new Netsparker Web Application Security Scanner version 4.

Configuring New Web Application Security Scans Just Got Easier

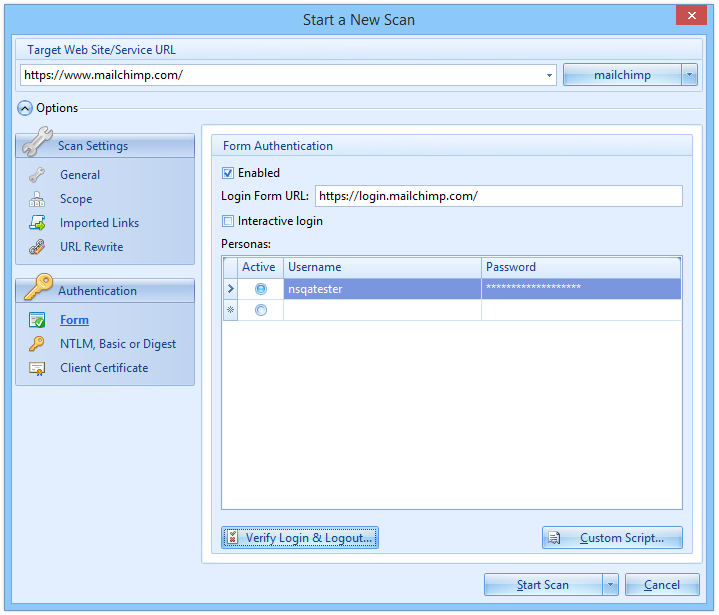

This is the first thing you will notice when you launch the new version of Netsparker Desktop: a more straightforward and easier to use New Scan dialog. Ease of use has become synonymous with Netsparker’s scanners, and in this version we raised the bar again, making it possible for many users to launch web security scans even if they are not very familiar with web application security.

As seen in the screenshot above, all the generic scan settings you need are conveniently placed, allowing you to quickly configure a new web application security scan. All of the advanced scan settings, such as HTTP connection options, have been moved to scan policies.

Revamped Form Authentication Support to Scan Password Protected Areas

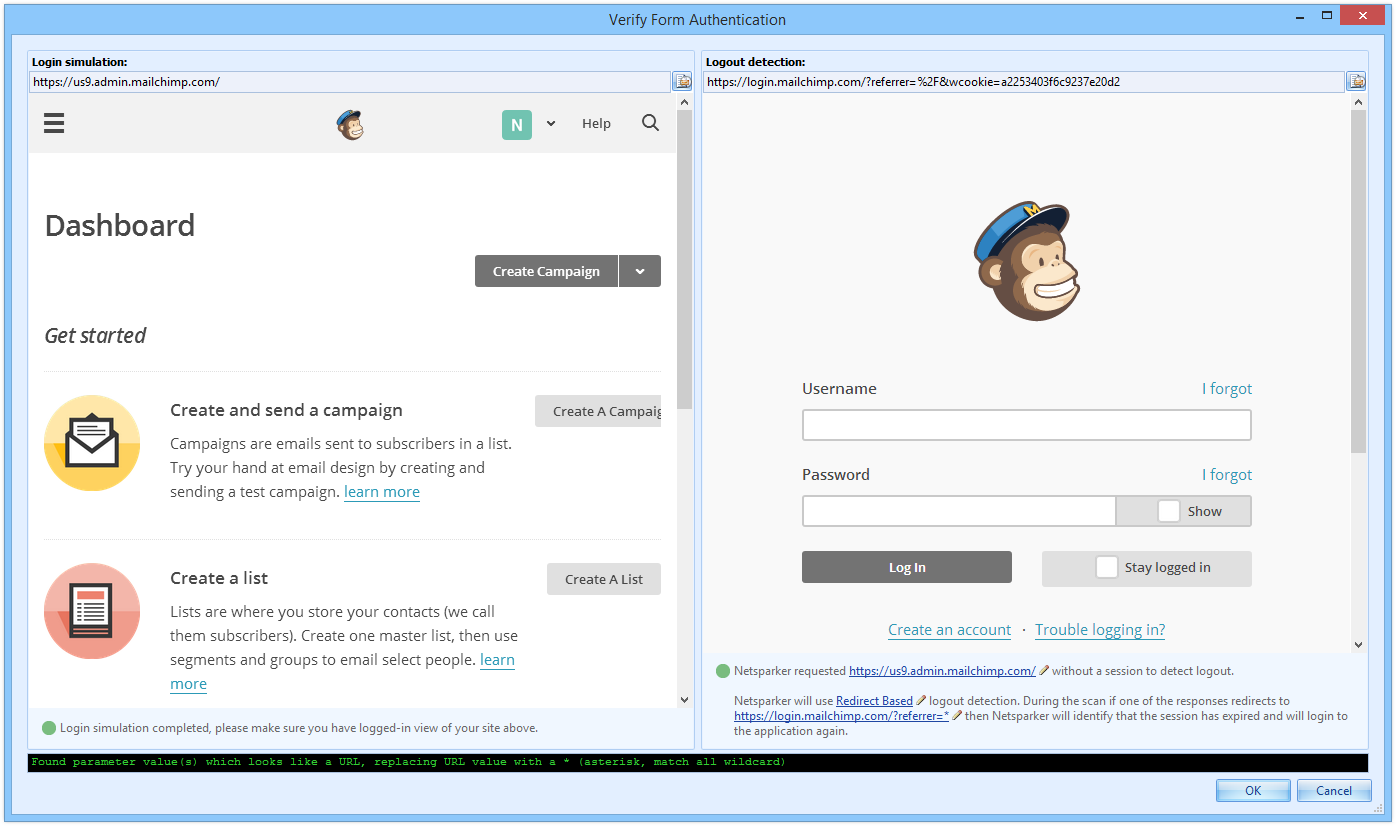

The new fully automated form authentication mechanism of Netsparker Desktop emulates a real user login, so even if the web application uses tokens or other one-time parameters, an out-of-the-box installation of the scanner can still log in to the password-protected area and scan it. In the example below, Netsparker is being used to log in to the MailChimp website.

Once you enter the necessary details, mainly the login form URL and credentials, you can click Verify Login & Logout to confirm that the scanner can automatically log in and identify a logged-in session, as shown in the screenshot below.

You do not have to record any login macros because the new mechanism is entirely DOM-based. You just have to enter the login form URL, username and password, and the scanner will automatically log in to the password-protected section. We have tested the new automated form authentication mechanism on more than 300 live websites and can confirm that, with an out-of-the-box setup, it works on 85% of them. A further 13%, the edge cases, can be handled by writing 2-5 lines of JavaScript code with Netsparker’s new custom script support. Pretty neat, don’t you think? Below are just a few of the login forms we tested.

The new Form Authentication mechanism also supports custom scripts, which can be used to override the scanner’s behaviour, for example in the rare cases where automated login button detection does not work. The custom scripting language has been changed to JavaScript because it is easier to use and many more users are familiar with it.

Out of the Box Support for Two-Factor Authentication and One-Time Passwords

The new Form Authentication mechanism of Netsparker Desktop can also be used to automatically scan websites that use two-factor authentication or any other type of one-time password technology. It is very simple to configure: specify the login form URL, username and password, and tick the Interactive Login option so that a browser window automatically pops up during the web application security scan, allowing you to enter the one-time code.

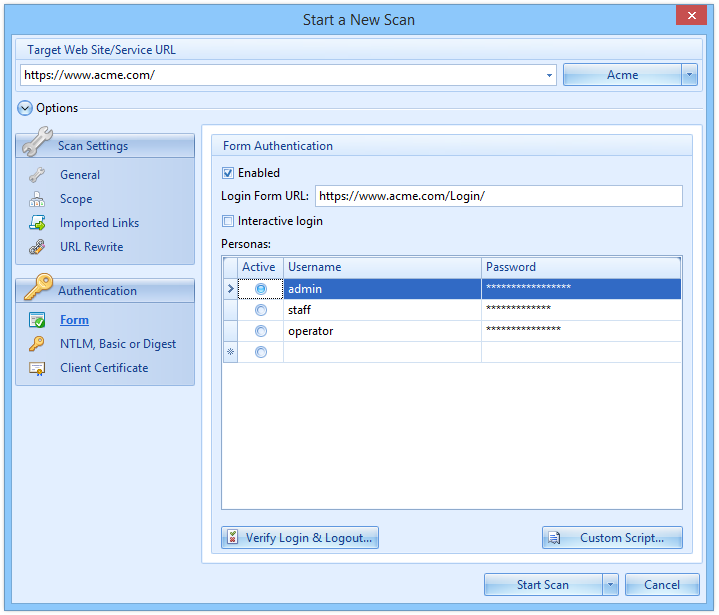

Ability to Emulate Different User Roles During a Scan

To ensure that all possible vulnerabilities in a password-protected area are identified, you should scan it using different users that have different roles and privileges. With the new form authentication mechanism of Netsparker you can do just that! When configuring the authentication details, specify multiple usernames and passwords; between scans you then only have to select which credentials to use, without recording new login macros or reconfiguring the scanner.

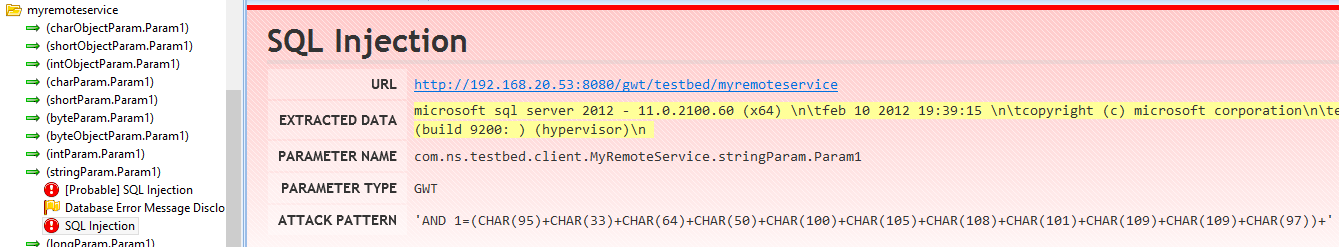

Automatically Identify Vulnerabilities in Google Web Toolkit Applications

Google Web Toolkit (GWT) is an open source framework that has gained a lot of popularity. Nowadays many web applications are built on it or use features and functions from it. Since web applications built with GWT depend heavily on complex JavaScript, we built a dedicated engine in Netsparker to support GWT.

This means that you can use Netsparker Desktop to automatically crawl, scan and identify vulnerabilities and security flaws in Google Web Toolkit applications.

Identify Vulnerabilities in File Upload Forms

As with every version or build of Netsparker we release, this version includes a number of new security checks. One of them deserves more attention than the others: the check for vulnerabilities in file upload forms.

From this version onwards, Netsparker Desktop checks all the file upload forms on your websites for the vulnerabilities such forms are typically susceptible to. For example, Netsparker tests that the validation checks in a file upload form work properly and cannot be bypassed by malicious attackers.
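To make "validation that cannot be bypassed" more concrete, here is a minimal server-side sketch of the kind of checks an upload handler might enforce, and that a scanner would then try to get around: blocklist evasion with odd extension casing, double extensions, null bytes, path separators and spoofed content. It is an illustration only, not Netsparker's logic; the allow-list and the function name `is_acceptable_upload` are hypothetical.

```python
import os

# Hypothetical allow-list: extension -> leading "magic" bytes the file content must start with.
ALLOWED_TYPES = {
    ".png": b"\x89PNG\r\n\x1a\n",
    ".jpg": b"\xff\xd8\xff",
    ".jpeg": b"\xff\xd8\xff",
    ".gif": b"GIF8",
}


def is_acceptable_upload(filename: str, content: bytes) -> bool:
    """Deliberately strict check that rejects common upload bypass tricks."""
    # Null bytes and path separators ("shell.php\x00.png", "../x.png") are rejected outright.
    if any(ch in filename for ch in ("\x00", "/", "\\")):
        return False
    # Compare extensions case-insensitively against an allow-list, so "shell.PhP" gains nothing.
    stem, ext = os.path.splitext(filename.lower())
    # Reject double extensions such as "shell.php.png", which some server setups execute.
    if "." in stem:
        return False
    expected_magic = ALLOWED_TYPES.get(ext)
    # The content must also start with the signature that matches the claimed type.
    return expected_magic is not None and content.startswith(expected_magic)
```

Checking leading bytes alone can still be fooled by polyglot files, which is one reason automated bypass testing of real upload forms is useful.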

Mixed Content Type, Cross-Frame Options and CORS Configuration

We also added various new web security checks, mostly around HTTP security headers. For example, Netsparker now checks whether X-Frame-Options is used and for possible problems in its implementation, which can lead to clickjacking and other security issues.
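As a rough illustration of what "problems in the implementation" of X-Frame-Options can mean, the sketch below classifies a header value. It is not Netsparker's implementation; the function name and messages are assumptions. It accepts `DENY` and `SAMEORIGIN`, and flags a missing header, conflicting values (duplicate headers typically end up combined into one comma-separated value) and the obsolete `ALLOW-FROM` directive. A complete check would also consider the Content-Security-Policy `frame-ancestors` directive, which this sketch ignores.

```python
from typing import Optional


def x_frame_options_issue(header_value: Optional[str]) -> Optional[str]:
    """Return a short description of a problem, or None if the value looks sound."""
    if header_value is None:
        return "X-Frame-Options header is missing"
    # Duplicate headers are usually combined into one comma-separated value.
    values = [part.strip().upper() for part in header_value.split(",")]
    if len(set(values)) > 1:
        return "conflicting X-Frame-Options values: " + ", ".join(values)
    value = values[0]
    if value in ("DENY", "SAMEORIGIN"):
        return None
    if value.startswith("ALLOW-FROM"):
        # Obsolete directive: modern browsers ignore it, which may leave the page frameable.
        return "ALLOW-FROM is obsolete and ignored by modern browsers"
    return "unrecognised X-Frame-Options value: " + value
```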

Another new check inspects the configuration of CORS headers. Finally, in this category we added Mixed Content Type checks for HTTPS pages and Content-Type header analysis for all pages.
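A simplified sketch of a CORS configuration check follows, assuming the scanner has sent a request whose `Origin` header names an origin the application should not trust. The `probe_origin` parameter (for example `https://attacker.example`), the function name and the messages are hypothetical, not Netsparker's. The sketch flags an echoed untrusted origin, the `null` origin and the `*` wildcard, and escalates when `Access-Control-Allow-Credentials: true` accompanies a specific untrusted origin. Browsers refuse to combine the wildcard with credentials, so that case is not escalated.

```python
from typing import Dict, List


def cors_issues(probe_origin: str, response_headers: Dict[str, str]) -> List[str]:
    """Flag risky CORS responses to a request sent with an untrusted Origin header."""
    # HTTP header names are case-insensitive, so normalise them first.
    headers = {name.lower(): value.strip() for name, value in response_headers.items()}
    allow_origin = headers.get("access-control-allow-origin")
    with_credentials = headers.get("access-control-allow-credentials", "").lower() == "true"
    issues: List[str] = []
    if allow_origin == probe_origin:
        issues.append("untrusted Origin is reflected")
    elif allow_origin == "null":
        issues.append("'null' origin is allowed")
    elif allow_origin == "*":
        issues.append("wildcard origin is allowed")
    # Browsers never send credentials to a wildcard, so only escalate specific origins.
    if issues and with_credentials and allow_origin != "*":
        issues.append("credentials are allowed for an untrusted origin")
    return issues
```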

XML External Entity (XXE) Engine

Applications that deal with XML data are particularly susceptible to XML External Entity (XXE) attacks. Successful exploitation of an XXE vulnerability can allow an attacker to launch other, more serious attacks, such as code execution. From this version onwards, Netsparker automatically checks websites and web applications for XXE vulnerabilities.
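Before trying external entities, a scanner can first check whether the target parser processes inline DTDs at all. The sketch below, an illustration rather than Netsparker's XXE engine, builds a harmless probe that declares two internal entities, each holding half of a random marker. The full marker can only appear in a response if the parser expanded `&a;&b;`, so an application that merely echoes the raw request does not trigger a match. A positive result only shows that DTD processing is enabled; confirming an actual XXE vulnerability needs further tests with external entities. The function names are hypothetical.

```python
import uuid
from typing import Tuple


def build_entity_probe() -> Tuple[str, str]:
    """Build a harmless XML probe that uses internal entities only (no files or URLs)."""
    marker = uuid.uuid4().hex  # 32 random hex characters
    first, second = marker[:16], marker[16:]
    payload = (
        '<?xml version="1.0"?>\n'
        f'<!DOCTYPE probe [<!ENTITY a "{first}"><!ENTITY b "{second}">]>\n'
        "<probe>&a;&b;</probe>"
    )
    return payload, marker


def entities_expanded(response_body: str, marker: str) -> bool:
    """The complete marker only appears if the parser joined &a;&b; into one string."""
    return marker in response_body
```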

Insecure JSONP Endpoints - Rosetta Flash & Reflected File Download Attacks

In this version we added a new security check to identify insecure JSONP endpoints and other controllable endpoints that can lead to Rosetta Flash or Reflected File Download attacks.
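The sketch below shows, in simplified form, the response properties such a check could look at after sending a probe callback value. It is an illustration under assumptions, not Netsparker's check; the function name and messages are hypothetical. Rosetta Flash needs the response body to begin with attacker-controlled bytes, so a `/**/` prefix before the callback is a common mitigation. Reflected File Download relies on reflected input in a response the browser may save under an attacker-influenced file name, which a fixed `Content-Disposition: attachment` header prevents. `X-Content-Type-Options: nosniff` is commonly recommended against both.

```python
from typing import Dict, List


def jsonp_issues(probe_callback: str, body: str, headers: Dict[str, str]) -> List[str]:
    """Flag response properties that can enable Rosetta Flash or RFD attacks."""
    h = {name.lower(): value.lower() for name, value in headers.items()}
    issues: List[str] = []
    # Rosetta Flash: the body must not start with bytes the caller controls.
    if body.startswith(probe_callback):
        issues.append("response starts with the caller-supplied callback (Rosetta Flash)")
    # RFD: reflected input without a fixed attachment disposition may be saved and run.
    if probe_callback in body and "attachment" not in h.get("content-disposition", ""):
        issues.append("input is reflected without Content-Disposition: attachment (possible RFD)")
    if h.get("x-content-type-options", "").strip() != "nosniff":
        issues.append("X-Content-Type-Options: nosniff is missing")
    return issues
```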

Even if your application does not use JSONP, it can still be vulnerable to these types of attacks in other forms, which is why it is always important to scan your website with Netsparker.

Other Netsparker Desktop 4 Features and Product Improvements

The list above highlights only the most prominent features and new security checks of Netsparker Desktop version 4, the only false-positive-free web application security scanner. This version also includes more new security checks and improvements to several existing ones, so the scanner’s coverage is better than ever before. Of course, we also included a number of product improvements.

Since there have been a good number of improvements and changes in this version, some things from older versions of Netsparker are no longer supported, such as scan profiles. Because we changed the way Netsparker saves scan profiles, scan profiles generated with older versions of Netsparker will no longer work. Therefore, I recommend checking the Netsparker Desktop version 4 changelog for more information on what is new, changed and improved.
